The link below takes you to a number of interviews and case studies of ongoing film and television projects, with emphasis on the production design and the virtual world in filmmaking.

Posted by

judy cosgrove

on Friday, May 22, 2020

"Game-changing technological advances over the years have altered and expanded the trajectory of filmmaking," says Noah Kadner, author of "The Virtual Production Field Guide," presented by Epic Games.

"For filmmakers the uncertainty of traditional pre-production and visual effects from production are replaced with working imagery far closer to the final pixel. And because this high-quality imagery is produced via a real-time engine, iteration and experimentation are simplified, cost-efficient and agile...Creative decisions about shots and sequences can be resolved much earlier in production, when the entire team is present, and not left to the last minute of post-production when crews have long since disbanded." Link to full document: "The Virtual Production Field Guide"

This puts the onus on the Production Designer and Art Department to take the digital reins and design and create digital worlds that filmmakers can work in. And it's getting easier!

Epic Games provides a video demo posted May 2020:

"Unreal Engine 5 empowers artists to achieve unprecedented levels of detail and interactivity, and brings these capabilities within practical reach of teams of all sizes through highly productive tools and content libraries."

Unreal Engine 5 Revealed! | Next-Gen Real-Time Demo Running on PlayStation 5

Posted by

judy cosgrove

on Tuesday, December 31, 2013

2013 has been a busy year, and it has been almost one year to the day since my last post!

I am constantly learning about new production processes as a member of the Virtual Production Committee. Chaired by David Morin and co-chaired by John Scheele, this group is a joint technology subcommittee of the following organizations:

Here is my report for VPC Meeting no. 8 that was held on October 3rd, 2013 at Warner Bros Studios:

CASE STUDY: The Making of “Gravity”

Chris DeFaria, Executive VP of Digital Production and Animation at WB and our host for the evening, told how, 4 years ago, when he was introduced to Gravity, he suggested to director Alfonso Cuaron a compositing technique developed by Alex Bixnell, VFX Supervisor on Little Man, in which the character's head was shot independently of the body and the two were comped together in a low-tech way. For Gravity, however, only the faces would be real and the rest of the movie would be animated.

Alfonso had already created a 7½-minute pitchvis for Universal with previs artist Vincent Aupetit, formerly of Framestore. Universal deemed the project cost-prohibitive, but this pitchvis and DeFaria’s confidence in finding an affordable solution got the production green-lit at Warner Brothers. DeFaria credited William Sargent of Framestore with undertaking the pitch from the beginning, and brought Framestore on board to lead the team(s).

He presented the 16-minute long opening shot of the film

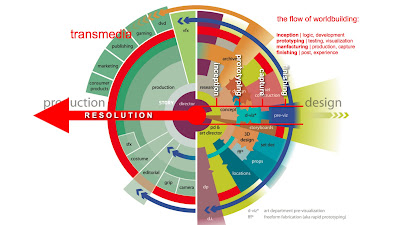

that gave the sense of the movie, and added that the concentric graphic (from 5D- see below) shown by David Morin

during his opening remarks, is very relevant. For Gravity, all phases of production—previs, postvis,

editorial, even script were concurrent, intersecting and informing each other,

unlike any film he had ever worked on.


| Production Design Mandala |

David next introduced Chris Watts, VFX Supervisor, also brought on by Chris DeFaria as a consultant to work with Framestore in exploring techniques to achieve total realism for the zero-gravity shots.

Watts showed a short clip from the voluminous NASA footage provided by WB for reference. He noted several key challenges. One would be getting the lighting right: there is no atmospheric perspective in outer space, and photographic tricks such as scale do not work. Another was figuring out a way to free the actors from cables and uncomfortable rigging so they could focus on acting. Actors on cables are very difficult to shoot, a problem that would be compounded by the length of the shots the director was looking for.

Alfonso initially wanted the capability to re-light and re-photograph the actors in post, which would require 3D performance capture, so they tested a technique using the Light Stage developed by Paul Debevec at ICT.


| ICT Light Stage |

The results were very good, but the shoot was problematic. They were able to shoot only 5 minutes a day, creating a lot of footage that took 4 hours to download. In addition, Alfonso was not comfortable shooting in a dome of blinking lights. Alfonso decided he must commit to shooting one angle and lighting one way, meaning he would need to previs the entire movie.

Watts knew their approach would be to shoot a more or less stationary person with a moving camera, but he was not a fan of motion control, since it is not an easy process for zero-gravity performance. Then Chris DeFaria forwarded him an article in Wired Magazine about Bot & Dolly (formerly Autofuss), a San Francisco-based company that was repurposing automotive assembly robots to shoot movies.

Watts contacted Bot & Dolly Creative Director Jeff Linnell, who sent him a clip of the robots in action showing that they had the technology needed to get the movie made.

He went to San Francisco for a tour and demo with Emmanuel "Chivo" Lubezki, the DP for Gravity.

Watts described handing the B&D team a Maya file of the previs before lunch; to his surprise, by the time they came back, the B&D crew was ready to shoot a test.

For the test, an actress stood on a plexi block in front of a green screen and moved as though floating in mid-air, grabbing onto invisible weightless objects. The camera, lights and reflectors, all mounted on robots, moved in sync, orbiting the actress as she moved and turned. The objects she was reaching for would be CG and comped later in post, but she was live-comped on set directly into the previs Maya file. Seeing the live comp convinced Chivo, Alfonso and Chris DeFaria that this was the way to go.

Jeff Linnell, Creative Director at B&D, spoke next about the tools they created for Gravity. The B&D team spent a year on software development for the motion control rig. Linnell emphasized the democratization of their system: it was not practical to have proprietary software that only his team could program. They developed Move, software that sits on top of Maya, so anyone who can operate Maya can operate the robot. Move enables a robot in the real world to be moved from Maya, and conversely, the robot can be moved in the real world and that movement recorded back into Maya.

This standardized tool optimized collaboration by allowing the team to operate the robot from their iPads. Framestore was able to animate all the camerawork for the movie in advance, while the B&D team validated the physics on set. Every shot planned in previs was achieved. The toolset they created allowed a flexibility and creative control that could satisfy the demands of even the most particular director or cinematographer.

The robot on Gravity was a 6-axis industrial arm capable of lifting 300 lbs. and moving at 4 m/s. It was mounted on a track (the 7th axis) and carried a pan-tilt-roll head, for 10 axes in all. Additionally, the actors were in a gimbal rig that also had pan-tilt-roll axes, making a 13-axis robot system on set. Precision was paramount: repeatability was accurate to 0.05 mm at full speed. Every shot was a synchronized, programmed movement of actors, camera and lights.
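The axis arithmetic above is easy to lose track of. Here is a minimal tally, assuming the counts exactly as reported (6 arm axes, 1 track axis, 3 on the pan-tilt-roll head, 3 on the actors' gimbal); the dictionary labels are just illustrative names, not anything from Bot & Dolly's software:

```python
# Axis counts as reported for the Gravity rig; the labels are illustrative only.
rig_axes = {
    "industrial arm": 6,   # 6-axis industrial robot arm
    "track": 1,            # the 7th axis: the track the arm rides on
    "camera head": 3,      # pan, tilt and roll on the head attached to the arm
    "actor gimbal": 3,     # pan, tilt and roll on the gimbal holding the actor
}

# Everything except the actors' gimbal belongs to the camera robot.
camera_side = sum(n for name, n in rig_axes.items() if name != "actor gimbal")
total = sum(rig_axes.values())

print(f"camera-side axes: {camera_side}")  # camera-side axes: 10
print(f"total axes on set: {total}")       # total axes on set: 13
```

The 10 camera-side axes match the "10 axes" figure for the arm, track and head; adding the actors' gimbal gives the 13 axes on set.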


| LED Light Box with Bot & Dolly Camera Rig |

David then introduced Chris Edwards, CEO of Third Floor Inc., the company responsible for the entire previs of Gravity. Edwards noted, “Gravity is an auteur film created by a director who envisioned the script in three weeks by writing it down, and he wanted to complement the story with very innovative, almost revolutionary camera work.” Alfonso wanted extremely long shots, some of them 12 minutes or longer. Most films have over 2,000 shots; Gravity had 192 shots in total. Within the first 30 minutes of the movie there are only 3 seamless cuts. He also wanted a high degree of accuracy regarding the geography of the Earth and its orbit.

Edwards showed the original pitchvis (one continuous shot) that Alfonso had created for Universal 5 years ago with Vincent Aupetit, now Previs Supervisor at Third Floor’s new facility in London.

Third Floor worked another 11 months in collaboration with Alfonso and Framestore to create the previs Maya files that Chris Watts later took to Bot & Dolly. Edwards described how Alfonso developed a close working relationship with each previs artist, using physical models and a lipstick camera to explore shots and encourage collaboration. These meetings went into so much detail that they were video-recorded as a way to keep everyone involved on track with his vision.

All 192 shots were first conceived in previs as a hand-animated creative pass to get a clear idea of all the elements in a scene. For many of the shots they also did a simulated pass in MotionBuilder. They took advantage of MotionBuilder’s physics engine and, by changing some of the code, allowed the animator to fire the jet packs with an Xbox controller and control the tethering.
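Part of the value of a physics-driven pass is that weightless motion is unforgiving: with no gravity or drag, a short burst of thrust leaves a body drifting at constant velocity until another force acts on it. The sketch below is a hypothetical one-dimensional illustration of that behaviour, not MotionBuilder's physics engine or the code the previs team modified; the mass, thrust and timings are made-up values:

```python
from dataclasses import dataclass


@dataclass
class Body:
    mass: float            # kg (hypothetical astronaut plus suit)
    position: float = 0.0  # m, one dimension for simplicity
    velocity: float = 0.0  # m/s


def step(body: Body, thrust: float, dt: float) -> None:
    """Advance one time step with semi-implicit Euler; no gravity, no drag."""
    accel = thrust / body.mass          # Newton's second law: a = F / m
    body.velocity += accel * dt         # update velocity first...
    body.position += body.velocity * dt # ...then position with the new velocity


astronaut = Body(mass=120.0)
dt = 0.1  # seconds per step

# Fire a hypothetical 60 N jet pack for 1 s (10 steps), then coast for 4 s (40 steps).
for i in range(50):
    thrust = 60.0 if i < 10 else 0.0
    step(astronaut, thrust, dt)

print(f"velocity after burn and coast: {astronaut.velocity:.2f} m/s")
print(f"distance travelled: {astronaut.position:.3f} m")
```

With these assumed numbers the body reaches 0.5 m/s during the one-second burn and keeps exactly that speed for the whole coast, the kind of persistent drift that is easy to get subtly wrong when animating by hand.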

The Art Department, led by Production Designer Andy Nicholson, generated 3D set models for use in previs. Although they worked without direct assistance from NASA, they were able to stay very faithful to the research found on the internet. In addition to 3D set designs, concept art, key frames and 3D set dressing, they provided extensive image- and video-based detail early on that aided the previs, including textures, movement of fabrics, and studies of weightlessness.

Edwards noted the advance in virtual lighting. The previs files were passed over to Framestore, where DP Chivo hand-lit every shot, which drove the lighting decisions on set. He acknowledged how Framestore pushed the limits of its resources with great dedication to make the film possible.

Chris Lawrence, Framestore CG Supervisor on Gravity, Skyped in from London. He worked on the project all the way through to delivery. Echoing Chris DeFaria and Chris Edwards, Lawrence credited the success of this production in part to William Sargent’s investment in the project and to his having fostered creative collaboration between all departments within Framestore.

He noted how having the Art Department working in-house during the previs phase gave them the opportunity to integrate fully designed assets for a more complete vision very early in the production. And because all departments were digital and could access data storage at Framestore, the iterative nature of the process was enhanced.

He mentioned the special effects harness rigs they developed for Sandra Bullock to aid her performance of weightlessness.

He explained how VFX Supervisor Tim Webber combined 3 shoot methods: traditional camera and crane with traditional lighting; motion control camera and LED box; and a motion control wire rig with motion control camera. These 3 methods were easily blended together because of the problem solving they did through previs. The work they did with Chivo to figure out the lighting in previs also paid off in the end, when it came time to reassemble and comp the CG bodies with the real faces.

Lawrence presented a short video that elaborated on the LightBox and Tim Webber’s idea of projecting a virtual environment on the LED screens surrounding the actors. They provided an animated version of the movie as seen from the actor’s point of view. This visual information was low-res, but enough to give the actors a feeling of where they were in space, helping them do their job. At the same time, it was a dynamic lighting source that provided subtleties of color, atmosphere and reflection for every moment of every shot in the LightBox, making the film feel that much more real.

Q&A

What was the resolution of the 6 panels in the LightBox?

Each panel was made up of several 5-inch-square tiles, each 64 × 64 pixels.

It was low-res, but a lot of effort went into the color calibration of the panels.
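To put "low res" in perspective, the pixel pitch follows directly from the tile size. A quick check, assuming the 64 pixels span the full 5-inch edge of each tile (the answer doesn't mention gaps or bezels between pixels or tiles):

```python
MM_PER_INCH = 25.4

# Tile figures as given in the answer: 5-inch-square tiles, 64 x 64 pixels each.
tile_edge_in = 5.0
tile_pixels = 64

pitch_mm = tile_edge_in * MM_PER_INCH / tile_pixels  # spacing between pixel centres
pixels_per_inch = tile_pixels / tile_edge_in         # linear pixel density

print(f"pixel pitch: {pitch_mm:.2f} mm")                    # pixel pitch: 1.98 mm
print(f"density: {pixels_per_inch:.1f} pixels per inch")    # density: 12.8 pixels per inch
```

Roughly 2 mm between pixel centres is coarse next to a computer monitor, which fits the description of the panels as a lighting and orientation source rather than a display meant to be photographed.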

Were there any digital facial performances or digital doubles?

Yes, we worked with a company called Mova that specializes in capturing facial geometry. We had a rig with Genesis cameras to augment it with textures that could be projected onto the geometry.

Can you talk about the safety features of the robots?

We had numerous layers of safety protocols on set that started in previs.

We ran the robots on the set without a human at variable speeds, and then did the same with a stunt double.

Health and Safety had to sign off on every shot, and there was an on-set protocol for that. We also had safety operators in place; if anyone was struck, they could stop the robots immediately.

What was the hierarchy of the management structure for this film?

It was a standard VFX production management structure, but larger.

The Art Dept. was right there with no physical barrier, and that helped us meet the short deadlines.

How did Alfonso’s use of physical models and the lipstick cam translate to the previs?

It was a visual translation, used as reference footage for the artists. The models were used throughout the whole process and were pretty battered by the end.

How were you dealing with scale in space?

Everything was to correct scale, in coherent 3D space. We tracked the NASA photos and built to them. Chris DeFaria could not distinguish the CG asset from the original photo reference.

When did you start working with the Art Department?

From around the time previs got underway until about 3 months after the shoot. We were able to block out the world early on with low-res schematics.

As we got a little further along, the Art Dept. provided very accurate models, which Alfonso preferred.

What kinds of motion capture systems did you use?

Framestore purchased a Vicon stage system specifically for Gravity.

Motion capture was used to previs some portions of the film.

We also carefully tracked the motion of the helmet with a motion capture camera attached to the LightBox. This was key to matching the subtleties of Sandra’s head to the whole-body performance later in animation. Almost all of the animation is hand key-framed, because it was difficult to motion-capture zero-gravity movement.

How long did you shoot on stage?

The live-action shoot was about 3 months.

What was the resolution of the final render? 2K or 4K?

It was 2K stereo. We invested a lot in rendering. We converted from Pixar RenderMan to Arnold (Solid Angle’s renderer). Chivo loved simulated bounce light, and we had a lot of heavy models that added to the render time.

What was the full budget of the production?

Around 80 million dollars, maybe more with the reshoots. There were a few moments at the beginning that had to be shot a year after the first shoot wrapped.

How did you integrate editorial into the process?

The Editorial Dept. was also based at Framestore and integrated very tightly with previs. Editing very long shots was convoluted, so the material was broken down into story beats instead of shots. Numbering the actions gave us a way of managing it. The editorial process was very complicated in both pre- and postproduction.

Was there an edit of the previs that really was the movie?

Yes, with sound and voiceover. When we got Sandra’s voiceover, the movie became real without a single shot.

How was the 3D conversion done?

The 3D conversion of the faces was done from a flat left eye. Everything else in the frame was rendered in stereo.

How long was Chivo involved in the previs phase?

Chivo is a key collaborator of Alfonso’s and brought a lot to the table. He was not there all the time, but was at Framestore for about 3 months. He came back during postproduction for some full-CG scenes that were not prelit, and gave feedback on those and on other work we did after the shoot.

How was it to have the Director and DP in-house with you for that long? Did it change the way you worked with the client?

The client relationship was sometimes a little frustrating, but overall it was a very positive experience, and we were able to do something we would not have been able to do without that direct collaboration.

How much involvement did Sandra have in previs?

We mostly worked with Alfonso; if Sandra had any notes, they came through him.

Were any sets built, or were they all digital?

Yes, the 2 smaller capsules were fully built, and there were a few proxy sets as well.

end of report.

A great in-depth discussion can be found at fxguide http://www.fxguide.com/featured/gravity/

Happy New Year!

JC


Posted by

judy cosgrove

on Wednesday, December 26, 2012

Today I came upon an excellent post by Alvaris Falcon about "ten upcoming technologies that may change the world." It was great food for thought, allowing me to catch up on all these new technologies and to expand on two of them, Google Glass (augmented reality) and 3D printing (rapid prototyping), based on my exposure to them this past year.

AUGMENTED REALITY (AR)

Ten years ago, the technology introduced in the film "Minority Report" set marketing experts on their ears.


| Tom Cruise wearing "data gloves" in front of translucent glass in the 2002 film "Minority Report" |

"...the concept of Augmented Reality conjures up that memorable scene in Steven Spielberg’s 2002 movie Minority Report in which Tom Cruise’s character strolls through a mall while being assaulted by marketing messages of a highly personal nature. A Guinness billboard addresses him by name and tells him he could use a drink; in the Gap store, a hologram of an assistant asks him if he is enjoying his previous purchase; an American Express advert shows a giant 3D credit card embossed with his membership details. It is an unsettling depiction of an advertising nirvana, all made possible by the supposed existence of retinal scanners."

The data glove interface depicted in the photo above is a form of virtual reality (VR). VR differs from AR in that it is an entirely virtual experience with no anchor in the physical world. AR experiences, on the other hand, use computer-enhanced glass to augment or overlay reality in real time with sensory data or other useful information about the world around us. We are familiar with the AR in this montage from the 1984 film "The Terminator":

"In the Terminator movies, Arnold Schwarzenegger’s character sees the world with data superimposed on his visual field—virtual captions that enhance the cyborg’s scan of a scene. In stories by the science fiction author Vernor Vinge, characters rely on electronic contact lenses, rather than smartphones or brain implants, for seamless access to information that appears right before their eyes." [Augmented Reality in a Contact Lens, by B.A. Parviz 9.30.09]


| Bionic Contact Lens "A Twinkle in The Eye" |

"Confusingly, both AR and Virtual Reality share key elements that allow users to experience enhanced interactions through digital and online input, and often the terms are used interchangeably: with the increasing advancements of gesture-based interfaces (think the Kinect), distinctions between Virtual Reality and AR are becoming increasingly irrelevant." [The Next Web : "How augmented reality will change the way we live" 8.25.12]

"[Google] Glass is, simply put, a computer built into the frame of a pair of glasses, and it’s the device that will make augmented reality part of our daily lives. With the half-inch (1.3 cm) display, which comes into focus when you look up and to the right, users will be able to take and share photos, video-chat, check appointments and access maps and the Web. Consumers should be able to buy Google Glass by 2014."

"Sight," a short sci-fi film by Eran May-raz and Daniel Lazo (published 8/1/12), imagines a world in which Google Glass-inspired apps are everywhere; Forbes tech writer A.W. Kosner says it "makes Google Glass look tame."

Beyond personal eyewear, General Motors Corp. researchers are working on a windshield that combines lasers, infrared sensors and a camera to take what's happening on the road and enhance it, so that aging drivers with vision problems can see a little more clearly.

Another AR application for smart windshield glass under development in the UK:

Connecting this back to the entertainment industry, John C. Abell wrote an interesting article for Wired about the roots of AR in science fiction: [Augmented Reality's Path From Science Fiction to Future Fact 4.13.12]

"There can be a very thin line between fantasy and science. Fantasy drives science. Set aside Geordi’s visor and today’s augmented reality glasses for a moment. Instead, look at some original Star Trek episodes to see handheld, long-range wireless communication devices and voice-input and omnipresent and seemingly omnipotent computing half a century before these nonexistent technologies became things we take for granted."

I'm looking forward to a relevant upcoming event, "The Science of Fiction," presented by 5D Institute in association with the USC School of Cinematic Arts (April 2013), which will expand on this concept (and others) as it applies to the art of production design for film, books and games.

"Science fiction prototyping, design fiction, and world building are all established narrative devices which engage the power of Fiction to grab elusive or as yet unrevealed Science and disrupt current thinking to provoke new discoveries."

3D PRINTING - RAPID PROTOTYPING

Before the advent of 3D printing, the rapid prototyping technology most familiar to me, used in the creation of vehicles and other props for films and theme parks, was the subtractive process known as Computer Numerical Control (CNC) milling. In the entertainment industry, companies such as Trans FX (TFX) used this method to create a number of the Batmobiles currently on tour, for example.


| Batman vehicles on tour |


| Artificial jawbone created by a 3D printer |

'Skyfall' filmmakers dropped some of their $150 million-plus budget on 3D-printed scale replicas of Bond's classic Aston Martin DB5.

"Voxeljet, a 3D printing company in Germany, created three 1:3 scale models of the rare DB5. Each model was made from 18 separate components that were assembled much like a real car.

The massive VX4000 printer could have cranked out a whole car, but the parts method created models with doors and hoods that could open and close.

The completed models received the famous DB5 chrome paint job and bullet hole details as finishing touches during final assembly at Propshop Modelmakers in the U.K. One of the models was sacrificed to the stunt gods during filming. Another was sold by Christie's for almost $100,000." Read this article by Amanda Kooser on CNET.

3D printing for environmental miniatures is currently being developed further by VFX pioneer Doug Trumbull, known for his work on 2001: A Space Odyssey. Trumbull was in Los Angeles this year to accept the Visual Effects Society's Georges Méliès Award. I had the privilege of meeting him when he also attended and spoke at the 4th meeting of the Virtual Production Committee in February 2012.

Virtual production is essentially digital compositing of 3D elements in camera (real and virtual, or virtual and virtual) in real time. Doug Trumbull has been combining elements in camera since the '70s with his Magicam System.

Trumbull is a proponent of filming optical effects and prefers using real miniatures rather than CGI for compositing. He is currently expanding on his own virtual production technique at his state-of-the-art studio in Massachusetts.

| Doug Trumbull at his studio in the Berkshire Hills of Massachusetts |

"We can make miniatures look absolutely real, that isn’t a variable. I recently looked at Blade Runner, Close Encounters and 2001 in my screening room on Blu-ray, and I could see everything that was in the original prints. Sometimes it is even better, because the grain and slight weave of physical projection is gone. All these years later the miniatures hold up and are not the slightest bit obsolete due to CGI. Miniatures are used so rarely, they are practically a lost art, though Hugo shows how successfully they can still be employed."

"Most directors aren’t comfortable in a virtual world, something I found out long ago with Magicam. Many actors, having learned their craft on a near-empty theater stage, are more comfortable. And I found that showing actors the composite on stage thrills them. “Finally, I don’t have to fake it.” If you don’t have something to show them, you wind up like 300, where everybody’s faking it because they have no solid idea about the virtual environment! My next step – something I haven’t done before except in brief experiments – is to replace the computer-generated, real-time virtual set with a miniature, which I find much more photo-realistic and believable than anything generated in a computer. Then I use Nuke and other comp techniques as needed, though I’m aiming for every shot to have at least 80 percent physical reality, rather than settling for the algorithm of the month. My tastes have always run to more organic approaches to visual effects." [ICG Magazine interview: Exposure: Douglas Trumbull 4.4.12]

Trumbull discusses his role in the history of filmmaking in another great interview by Wolfram Hannemann in May at the FMX Conference in Stuttgart, Germany [in70mm.com 5.10.12]

At the Siggraph 2012 Conference in Los Angeles this year, 3D Systems showcased CUBE, billed as the first at-home 3D printer. Attendees could print their own 3D files into physical objects (ABS plastic) on working machines provided at a hands-on demo booth.

| Siggraph Attendees playing with Cube printers |

The new stop-motion feature "ParaNorman" uses full-color Zprinted puppets.

| ParaNorman 3D-printed puppet faces |

Read an in-depth article by Brian Heater with great photos: How 3D Printing Changed the Face of 'ParaNorman'

Given that we already have 3D laser scanners in use and can create accurate digital files to replicate and/or design new objects with relative ease, the applications for 3D printing truly are going to change the world.

Posted by

judy cosgrove

on Tuesday, September 18, 2012

I am intrigued by the ways designers are thinking about art and architecture as our graphic interfaces become increasingly sophisticated. Other forms of interface are becoming invisible (especially in "smart buildings"). Here is an interesting, related post I came across online today: What Comes After the Touchscreen?

5D Institute has curated a new series of discussions that will be delving into this topic later this week. All of the speakers are excellent and Kevin Slavin, Greg Lynn and Peter Frankfurt are among my favorites.


| New City - design Greg Lynn, Peter Frankfurt, Alex McDowell; image courtesy Greg Lynn/ FORM |

When the city and the book become both virtual and interactive, and contain and fuel multiple scenarios which evolve and coexist within synthetic worlds, what new stories can we tell?

In association with USC School of Cinematic Arts, the 5D Institute invites you to join our diverse and interdisciplinary network of writers, architects, engineers and artists in a multi-panel provocative and disruptive discussion of the possibilities of dynamic environments in digital publishing, virtual architecture, and interactive media, and the role of world building in the future of storytelling.

Some of the mind-bending questions to be addressed during 2 sessions this week:

On hybrid spaces-

As information is liberated from concrete and paper how does new data transform the material from which it came?

On architect as storyteller-

Does the multi-authored narrative of the city inform our view into the future of a new kind of storytelling experience?

On new dimensions of story architecture-

Can the novelist's command of world-building be challenged and enriched by a new dimension that enables multi-authored pathways?

On creating story spaces-

In a flow towards the Virtual City and Interactive Book, what are the differences between them? How can we integrate the newly built worlds of the City and the Book; and who now are the authors of these spaces?

Posted by

judy cosgrove

on Sunday, July 29, 2012

Digital Storytelling Seminar, now in its 7th year, took place in May at the Tancred Theater at Filmens Hus in Oslo, Norway. As an active member of 5D Institute and 5D Conference, I was delighted to be able to accept an invitation to attend this partner event.

Digital Storytelling Seminar (DS) is the brainchild of 5D Conference founders Kim Baumann Larsen, Creative Director and partner at Placebo Effects, an Oslo-based VFX company, and Eric Hanson, Principal of xRex Studio and Associate Professor at the USC School of Cinematic Arts. In 2005 both realized they missed attending the defunct 3D Festival in Copenhagen, an annual event said to be "Europe's leading meeting place for professionals working in digital design, filmmaking, game development and architectural design." They sought out Angela Amoroso, DS co-founder and co-director, at the Norwegian Film Institute and launched the first DS seminar and workshops in 2006, providing a new platform to discuss advances in computer-generated imagery (CGI) in the service of visual storytelling.

DS connects and enriches digital artists and filmmakers in the Nordic community and beyond.

Each year new technologies and a wide range of projects are showcased by participants. Themes have varied, but the objective remains the same: to explore relevant and cutting-edge topics that educate and inspire, leading to new levels of filmmaking excellence. This year was no exception. It is interesting to note that many of the small participating companies are made up of CGI generalists, self-dubbed "Potatoes," who produce a wide array of independent projects and work cooperatively on larger ones, such as the soon-to-be-released Kon-Tiki, the newest Norwegian adventure film, based on Norwegian explorer and writer Thor Heyerdahl and his 1947 rafting expedition across the Pacific Ocean from South America to the Polynesian Islands. The film was made mostly with Norwegian VFX talent and had a record-breaking budget. Swedish companies Fido and Very Important Pirates did a fair share of the VFX work as well.

I met Dadi Einarsson, co-founder and Creative Director of Framestore Iceland, who sought out DS to become involved and to widen the Nordic filmmaking community to include Iceland (previously, DS had been primarily Scandinavian). He spoke passionately about his desire to bring more VFX work back home to Iceland. Framestore Iceland has doubled in size within three years of operation. Globalization within the VFX industry enabled him to direct a commercial in Iceland for a London agency with a client in China. They never met in person, yet Dadi delivered the spot in four weeks, with a previs animatic in the first week and the rest following, all online.

(Latest news is that Icelandic director and actor Baltasar Kormakur has bought the Icelandic Framestore branch in partnership with Dadi Einarsson.)

Stand-out key presenters included Alpo Oksaharju and Mikko Kallinen from Finland. They are the two-man development team of Theory Interactive, a new indie game company, and combined forces to produce Reset, a single-player, first-person puzzle game built around time travel. They have developed their own real-time game engine, PRAXIS, and in the process created a cinematic teaser trailer to test their work.

The lighting and textures are beautifully rendered, giving the trailer extraordinary depth and emotion, and this has set core gamers on their ear. It has also triggered buy-out offers from larger companies, which Alpo and Mikko have refused. They have pursued a creative dream on their own terms and prefer to keep it that way. There is no set release date for the much-anticipated game.

Participants in the "VFX Omelet" (short showcase segment) included Norwegian "regulars": Storm Studios, Gimpville, Qvisten Animation, Stripe, Electric Putty, Netron, Placebo Effects and newcomer Framestore Iceland Studio.

The list of high-quality and eclectic presentations:

Contraband (feature film) show and tell: Supporting visual effects from Iceland - Dadi Einarsson, Creative Director/VFX Supervisor, Framestore Iceland

Creating Digital Water: The process of creating simulated water and oceans, and the problems to be aware of, drawn from their work on Kon Tiki - Magnus Petterson, Lead Effects TD, Storm Studios

Stereoscopic Set Extension: Behind the scenes of the thrilling mine-cart sequence in the feature film Blåfjell 2 - Lars Erik Hansen, VFX Supervisor/Producer, Gimpville

Twigson in Trouble: A brief talk about the VFX that Qvisten Animation did on Twigson 3 - Martin Skarbø, VFX Supervisor, and Morten Øverlie, Animation Supervisor, Qvisten

Hugo’s There – The Unbelievable Truth: Adding a little character with e>motion - Peter Spence, VFX Artist, Electric Putty

In his presentation, Spence referred to the 5D production mandala, originally conceived by Alex McDowell, 5D Founder and Creative Director, to illustrate modern filmmaking: a circular chart that shows overlapping input from all departments, with design at the core. In Spence’s version, as creator and sole executor of Hugo, he himself appeared on the mandala at the beginning, middle and end. He does it all, another example of creative vision pursued on the artist’s own terms.

[Image: Modern Filmmaking Process]

Fredriksten Fortress – 350 years in eleven minutes: The history of Fredriksten Fortress, an outdoor projection on the walls of the inner fortress - Jørgen Aanonsen, Managing Director, and Torgeir Holm, Creative Director for 3D and VFX, Netron

The Cows Are On Your Table: The challenge of augmenting more than 100,000 breakfast tables with a digital story - Kim Baumann Larsen, Creative Director and Partner, Placebo Effects

Keynote speaker Sebastian Sylwan, CTO of Weta Digital and also a 5D founder, spoke in his address, entitled “The Evolution of Storytelling Through the Convergence of Art and Science,” about the history of the role of art and science in the service of story. He led off with Leonardo da Vinci’s painting The Last Supper, citing how artists have always used technology, in this case perspective, to enhance the telling of the visual story.

Jumping forward, in an interview for a local Norwegian online magazine, Sylwan spoke about the non-linearity that technology has afforded filmmakers today: “Traditionally, film production has evolved over the last 120 years to include specific tasks. Because film was expensive, you needed to plan very well what needed to be done when you were in front of the camera… Nowadays, the visual effects part of that has grown a lot, compared to where it was even 10 years ago. That growth has been accompanied by a lot of technological advancements, and those technological advancements have enabled some of the creative parameters to be extended, experimented with, brought forward, involving impulses from various areas. In short, I think we are talking about a type of virtual production now. There isn’t really a checklist in my mind or a definition of what virtual production is or isn’t, but I think it’s basically about using virtual tools in order to make films. So it’s really production with the best tools available, and those tools are able to enable communication between creative figures in the film making process that would normally not talk to each other.”

Sylwan also presented a Weta showreel and took numerous questions about Weta’s past projects, including Rise of the Planet of the Apes, which showcased Weta’s advances in facial performance capture, particularly of the eyes.

The final key presenter was Swedish VFX studio Fido, a company that specializes in creatures. Fido was formed in 2005 when four companies (four people) merged; it has kept the same staff, added 18 artists and has 45 workstations for peak times. Fido was recently acquired by the film production company Forestlight Group, a sister company to Noble Entertainment, one of the largest distributors in Scandinavia. Fido balances longer-term feature work with shorter-term commercials and uses the same teams for both; there are no department splits. Fido has a very well-developed fur and feather pipeline as well as a system for creating realistic ocean surfaces.

Claes Dietmann, Producer at Fido, presented a showreel featuring Fido’s impressive creature work on three productions: the German feature Yoko, Underworld Awakening, and Kon Tiki.

Yoko is a children’s story of a young yeti (Himalayan snowman) with magical powers.

Staffan Linder presented the werewolf transformation Fido created for Underworld Awakening, a project the studio started, then lost to Canada, and was later awarded the transformation animation sequence again. The result is a horrific transformation that succeeds in being unlike any werewolf transformation seen before.

Mattias Lindahl presented Fido’s creation of the larger-than-life whale shark that attacks the raft in Kon Tiki. Fido spent a year on this film, which will have a U.S. release without subtitles: each scene was shot twice, once in Norwegian and again in English.

The incredible growth in Nordic VFX production in recent years, following the success of films such as Max Manus (made entirely with Norwegian VFX talent), Troll Hunter and Kon Tiki, makes the Digital Storytelling Seminar not only interesting and informative but also a lot of FUN. My thanks go to my hostess, Angela Amoroso; without her hospitality my attendance would not have been possible.
